Expert Thinking and AI (Part 1)

Cover Image by cottonbro studio from Pexels

By Althea Need Kaminske

Note: To the best of my knowledge I did not use generative AI to write this post. Any mistakes or insights are my own.

AI is big right now. It’s been big for a while, but it seems to be pushing more and more aggressively into the educational space, so I can no longer just ignore it. I know, this was probably irresponsible of me as a cognitive psychologist who is deeply interested in learning and education. In retrospect, I think I was hoping that if I politely ignored it, it might go away. But it did not take the hint. Now, I have to patiently explain why I am not interested. Don’t get me wrong, I don’t think AI is the downfall of society or anything. I firmly believe that AI is a tool, and as such it is neither inherently good nor bad. As a tool, it can be used or misused. I just have the unsettling suspicion that what is being labeled as AI isn’t always AI, at least not in the way you think it is, and even if it is AI, it might not always be the right tool for the job.

What is Artificial Intelligence?

Artificial Intelligence is many things. As a field of study, Artificial Intelligence seeks both to better understand human cognition through computer models and to improve task-based computer models (that is, models where the goal is to improve performance on a task and not necessarily to model how a human would perform that task). Artificial Intelligence is in many ways a sister discipline to cognitive psychology, which also seeks to better understand human cognition. Both fields are considered part of the broader interdisciplinary field of cognitive science, which is informed by Philosophy, Linguistics, Anthropology, Psychology, Artificial Intelligence, and Neuroscience (1,2). Both came about in the 1950s and 60s, and while we can quibble about when, exactly, cognitive psychology became its own sub-discipline of psychology, the general consensus is that the field of Artificial Intelligence started in the summer of 1956 at the Dartmouth Summer Research Project on Artificial Intelligence (1).

Cognition, as it turns out, is pretty complex. Therefore, the study of Artificial Intelligence is broken into several different subproblems. The subproblems look like a mirror held up to a cognitive psychology textbook: reasoning & problem solving, knowledge representation, planning & decision-making, learning, natural language processing, perception, etc. If you can ask how humans do it, you can also try to build a model of it and ask how a machine might do it.

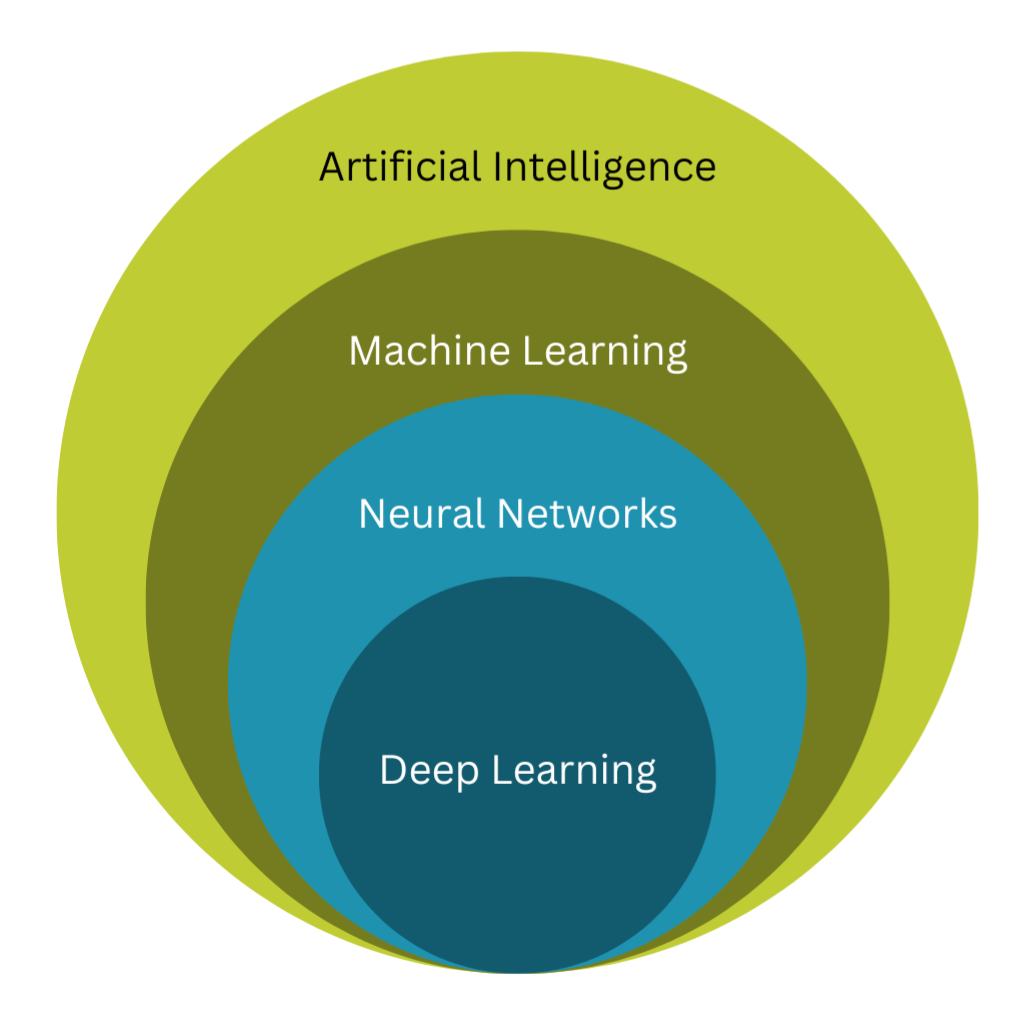

Deep learning is a type of neural network, which is in turn a type of machine learning, which is in turn a type of Artificial Intelligence. There are, of course, many other subfields and types that can be combined in different ways to create AI tools, but this AI avocado demonstrates the basic relationship: Not all AI is deep learning, but all deep learning is AI. (image by Althea Kaminske)

There are many different AI tools used to study and attempt to solve these different subproblems (note: to distinguish between Artificial Intelligence as a field of study and the more common use of AI as a tool, I’m using “AI” to refer only to the tools). Some types of AI that you may have heard of include machine learning, neural networks, deep learning (3), and generative pre-trained transformers (GPT) (more on this in the section below). Machine learning is a subfield of Artificial Intelligence that gives machines the ability to learn how to solve a problem or complete a task without being explicitly programmed (4). Again, in many ways this is a sister discipline to the study of learning within cognitive psychology. At the most basic level, machine learning involves algorithms and heuristics, reasoning statements, and models of problem solving. These simpler tools can be combined in different ways to make tools that learn and improve over time. That is, they do not have to be preprogrammed with precise instructions. For example, Google’s search engine was, for a time, essentially just a fancy algorithm. An algorithm is a set of rules or instructions that are followed to give a precise result. If you follow a cooking recipe exactly (measuring out your flour on a precise scale, keeping a consistent oven temperature, etc.), you should get the same result every time. If your cooking looks more like adding however much garlic or butter your heart tells you, you’re no longer following the algorithm and are instead following more of a heuristic (mine is that doubling the garlic in a recipe will typically result in a better dish). For a long time, Google preferred to use algorithms instead of machine learning for its search results because an algorithm follows a set of rules that engineers can easily modify and control (5).
Machine learning, or AI, was initially used in Google searches to help with phrases that the original algorithm may not have been optimized for, i.e., new searches the system had not seen before (5). It is adaptive and useful, but allows for less control and oversight.
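To make the algorithm-versus-learning distinction concrete, here is a minimal Python sketch. Everything in it is hypothetical (the tea-strength task, the 5-minute rule, and the tasting data are made up for illustration): `is_strong_tea_rule` is a fixed rule an engineer wrote, while `learn_threshold` picks its cutoff from labeled examples, which is the basic move that separates machine learning from a hand-written algorithm. Real machine learning systems learn far more than a single number, so treat this as a cartoon of the idea.

```python
def is_strong_tea_rule(steep_minutes: float) -> bool:
    # Hand-written algorithm: the engineer fixes the cutoff (hypothetically 5 minutes).
    return steep_minutes >= 5.0


def learn_threshold(examples: list[tuple[float, bool]]) -> float:
    """Learn a steeping-time cutoff from labeled examples.

    Each observed time is tried as a candidate cutoff ("strong" means at or above
    it), and the cutoff that misclassifies the fewest examples is kept.
    """
    best_cutoff, best_errors = 0.0, len(examples) + 1
    for cutoff, _ in examples:
        errors = sum((minutes >= cutoff) != label for minutes, label in examples)
        if errors < best_errors:
            best_cutoff, best_errors = cutoff, errors
    return best_cutoff


# Hypothetical tasting notes: (steeping time in minutes, did a taster call it strong?)
examples = [(2.0, False), (3.0, False), (3.5, False), (4.0, True), (6.0, True), (7.0, True)]
cutoff = learn_threshold(examples)
print(cutoff)                                  # 4.0
print(is_strong_tea_rule(4.5), 4.5 >= cutoff)  # False True
```

The fixed rule only changes if someone edits it; the learned cutoff changes whenever the data change. That mirrors the trade-off above: the learned version adapts, but engineers have less direct control over what it ends up doing.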

Machine learning, like the study of human learning, is kicked up a notch and given another layer of complexity when we model learning using neural networks. A basic neural network looks a lot like simple systems within the nervous system. The most basic model includes inputs, outputs, and a hidden layer. The input layer takes in information and sends that forward to a hidden layer which does some processing. The hidden layer then feeds that information forward to the output layer which determines what response to choose. The level of complexity, and ability to solve problems, is kicked up a notch again when we add more hidden layers in deep learning. More hidden layers allow for more complex processing.
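The input, hidden, and output flow described above can be sketched in a few lines of plain Python. This is a toy forward pass only: the weights are hand-picked placeholders rather than learned values, the two input numbers stand in for features a real network would extract from an image, and the starfish labels are hypothetical. Making the network “deep” just means adding more (weights, biases) pairs to the list passed to `forward`.

```python
import math


def sigmoid(x: float) -> float:
    # Squash any number into the range (0, 1), loosely like a unit's firing rate.
    return 1.0 / (1.0 + math.exp(-x))


def dense_layer(inputs, weights, biases):
    """Fully connected layer: each unit sums its weighted inputs, adds a bias, then applies sigmoid."""
    return [
        sigmoid(sum(w * x for w, x in zip(unit_weights, inputs)) + b)
        for unit_weights, b in zip(weights, biases)
    ]


def forward(inputs, layers):
    """Feed activations forward through each (weights, biases) layer in turn."""
    activations = inputs
    for weights, biases in layers:
        activations = dense_layer(activations, weights, biases)
    return activations


# Hypothetical hand-picked weights: 2 inputs -> 3 hidden units -> 2 output units.
hidden = ([[2.0, -1.0], [-1.5, 2.5], [0.5, 0.5]], [0.0, -0.5, 0.1])
output = ([[1.5, -2.0, 0.3], [-1.0, 2.0, 0.2]], [0.0, 0.0])
labels = ["five-armed starfish", "many-armed starfish"]

scores = forward([1.0, 0.2], [hidden, output])
choice = labels[scores.index(max(scores))]  # the output layer "chooses" the highest-scoring label
print(choice)  # five-armed starfish
```

In a real network, learning means adjusting those weights from data until the output layer picks the right label; the forward pass itself stays this simple.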

Neural networks may seem intimidating, but the basic idea is pretty simple! Picture from Neural Networks for Babies by Chris Ferrie and Dr. Sarah Kaiser. On the left is a depiction of an input layer that takes in information about a picture of a starfish. On the right is a depiction of an output layer that chooses between several possible types of starfish.

The term AI or Artificial Intelligence can refer to a number of different fields of study and tools. AI tools range from algorithms that recommend what to watch next or help you search the internet, to the deep learning needed to operate self-driving cars. While many AI tools use algorithms as part of their toolset, when we refer to AI or machine learning the implication is generally that the AI tool is learning in some capacity using neural networks or deep learning. When a company or product markets itself as using AI, that could mean a variety of things.

Talking To Machines? Now They Talk Back!

A very topical issue of the Journal of Applied Research in Memory and Cognition came out last December with a focus on the use of generative chatbots and human cognition. The target article “Expert Thinking With Generative Chatbots” by Imundo and colleagues (7) provides an overview of what generative chatbots are and provides a framework for understanding how our thinking is affected by using these chatbots. I’ve already outlined some basics of AI in the overview above, but since this article deals specifically with ChatGPT, one of the more popular chatbots, it’s worth going over Imundo et al.’s summary of how generative chatbots work.

Like the other AI tools we’ve discussed so far, chatbots come in several different types and iterations. Some of the earliest chatbots were simple rule-based chatbots. They combined natural language processing (which turns written or spoken language into data points that the machine can use) with if-then rules to respond to human language. These chatbots could respond to questions like “Do you like tea?” with simple ‘yes’ or ‘no’ responses and a bit of flourish: “Yes, I like tea. What about you?” The next iteration of chatbots was retrieval-based chatbots, which combined machine learning and deep learning techniques with response banks to create more flexible, conversation-like interactions (8). The AI can recognize a general topic and use machine learning to improve its ability to match the message to a response. These chatbots can respond to “Do you like tea?” with slightly more elaborate answers: “Yes. Tea is one type of beverage enjoyed by people worldwide. What other beverages do you like to drink?”
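Here is a minimal sketch of the two older chatbot styles, with everything hypothetical: the keyword rules, the two-entry response bank, and the function names. The rule-based bot only handles messages its if-then rules anticipate. The retrieval-based bot returns the stored response whose prompt shares the most words with the message; a fixed word-overlap (Jaccard) score stands in here for the learned matching a real retrieval-based chatbot would use, and real natural language processing is much richer than pulling out lowercase words.

```python
import re


def tokenize(text: str) -> set[str]:
    # Crude stand-in for natural language processing: lowercase words only.
    return set(re.findall(r"[a-z]+", text.lower()))


def rule_based_reply(message: str) -> str:
    # Hand-written if-then rules: the bot only "understands" what a rule anticipates.
    words = tokenize(message)
    if {"like", "tea"} <= words:
        return "Yes, I like tea. What about you?"
    if {"like", "coffee"} <= words:
        return "No, I prefer tea. What about you?"
    return "Sorry, I don't understand."


# Hypothetical response bank: stored prompt -> canned response.
RESPONSE_BANK = {
    "do you like tea": "Yes. Tea is one type of beverage enjoyed by people worldwide. "
    "What other beverages do you like to drink?",
    "how do I brew tea": "Steep the leaves in hot water, then remove them before serving.",
}


def retrieval_reply(message: str) -> str:
    # Return the canned response whose stored prompt overlaps most with the message.
    words = tokenize(message)

    def jaccard(prompt: str) -> float:
        prompt_words = tokenize(prompt)
        return len(words & prompt_words) / len(words | prompt_words)

    return RESPONSE_BANK[max(RESPONSE_BANK, key=jaccard)]


print(rule_based_reply("Do you like tea?"))
print(retrieval_reply("What is the best way to brew tea?"))
```

The retrieval bot can cope with phrasings no rule anticipated, but it can still only say what is already in its response bank.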

Photo by MYKOLA OSMACHKO from Pexels

More recent iterations of chatbots use ‘transformers’ (which you might recognize from the full name of GPT: generative pre-trained transformer). Transformers are deep neural networks that let the model weight information based on its importance (7). Combined with large data sets, transformers allow for the development of large language models: neural networks with billions of parameters. Very fancy, very complex. These chatbots might make suggestions about how best to brew tea.
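Weighting information by importance is usually done with a mechanism called attention. Below is a minimal, single-query version of scaled dot-product attention in plain Python, assuming made-up 2-dimensional vectors for three words. Real transformers learn these vectors (through learned query, key, and value projections), run many attention heads across many layers, and use far more dimensions, so this shows only the core weighting step.

```python
import math


def softmax(scores):
    # Turn raw scores into positive weights that sum to 1 (subtracting the max for numerical stability).
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(query, keys, values):
    """Scaled dot-product attention for a single query vector.

    Each key is scored by its dot product with the query, divided by sqrt(d);
    softmax converts the scores to weights, and the output is the weighted
    average of the value vectors.
    """
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    weights = softmax(scores)
    output = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(len(values[0]))]
    return weights, output


# Hypothetical 2-d vectors for the words in "brew green tea".
words = ["brew", "green", "tea"]
keys = [[1.0, 0.0], [0.0, 1.0], [2.0, 0.0]]
values = keys
query = [1.0, 0.0]

weights, output = attention(query, keys, values)
for word, w in zip(words, weights):
    print(f"{word}: {w:.2f}")
```

Words whose vectors line up with the query get larger weights, so they contribute more to the output; that is the sense in which the model “assigns weights based on importance.”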

The complexity of these generative chatbots allows them to respond in more nuanced and conversation-like ways. Their performance can be improved even further by a couple of fine-tuning methods. One is reinforcement learning from human feedback. Humans rate the chatbot’s responses, which creates a reward structure for the chatbot. When it generates responses, it can then select the one that its algorithm predicts will be most highly rated by a human. Another fine-tuning method is retrieval-augmented generation, which allows the chatbot to respond using novel information that it was not trained on (7). If asked “Do you like tea?” these chatbots might be able to not only discuss the finer points of brewing tea, but also summarize reviews of your local tea shops.
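Both ideas can be illustrated with toy Python. The sketch assumes a made-up “reward model” that simply averages human ratings per word, and a retriever that ranks documents by shared words; all data, function names, and the prompt format are hypothetical. `best_of_n` illustrates the selection step described above; in practice, reinforcement learning from human feedback usually also uses the reward model to update the chatbot’s own weights. Retrieval-augmented generation is shown only up to building the prompt, since the generation step needs an actual language model.

```python
import re
from collections import defaultdict


def tokenize(text):
    return re.findall(r"[a-z]+", text.lower())


def fit_reward_model(rated_responses):
    """Toy reward model: a word's score is the average human rating of responses containing it."""
    totals, counts = defaultdict(float), defaultdict(int)
    for response, rating in rated_responses:
        for word in set(tokenize(response)):
            totals[word] += rating
            counts[word] += 1
    return {word: totals[word] / counts[word] for word in totals}


def predicted_rating(model, response):
    # Average the learned word scores; unseen words contribute 0.
    words = tokenize(response)
    return sum(model.get(w, 0.0) for w in words) / max(len(words), 1)


def best_of_n(model, candidates):
    # Keep the candidate the reward model predicts a human would rate highest.
    return max(candidates, key=lambda c: predicted_rating(model, c))


def retrieve(documents, question, k=1):
    # Rank documents by how many words they share with the question; keep the top k.
    q = set(tokenize(question))
    return sorted(documents, key=lambda d: len(q & set(tokenize(d))), reverse=True)[:k]


# Hypothetical human ratings (1-5) of earlier chatbot responses.
rated = [
    ("Use water just off the boil for black tea", 5),
    ("Tea is a drink", 2),
    ("I don't know", 1),
]
model = fit_reward_model(rated)
candidates = ["Black tea likes water just off the boil", "Tea is a drink people drink"]
print(best_of_n(model, candidates))

# Hypothetical documents the chatbot was never trained on.
docs = [
    "Review: the tea shop on Main Street brews excellent oolong",
    "Bus timetable for the weekend",
]
question = "Which local tea shop has good reviews?"
prompt = "Context: " + " ".join(retrieve(docs, question)) + "\nQuestion: " + question
print(prompt)
```

Notice that the toy reward model rewards whatever words the raters happened to like, which foreshadows the point below: the chatbot optimizes for what is rated or associated, not necessarily for what is correct.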

Another factor that affects how well chatbots can respond to your tea needs is the data set they were trained on (4). Recall that these tools work by learning. They are given a set of data, some initial parameters and instructions, and trained to figure out the weights and relationships between different words and phrases. A chatbot might learn that certain types of tea are commonly mentioned together, and thus when you ask what types of tea there are, it will list the types that are commonly mentioned together. Without careful thought about where it’s getting its information, or how its responses are being rewarded through reinforcement learning from human feedback, the chatbot is simply providing you with the most popular or most strongly associated response. It is not necessarily weighing its response based on reputable or expert sources. Thus, chatbots perform best when they are designed for a specific purpose: trained on a carefully designed data set and fine-tuned for that purpose.
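A toy illustration of learning associations from what is frequently mentioned together. The corpus, the tea list, and the function names are hypothetical, and real language models learn continuous weights over word pieces rather than keeping explicit counts, but the lesson carries over: the answer reflects what the data mention most often, not which sources are reputable.

```python
import re
from collections import Counter
from itertools import combinations

TEA_TYPES = {"green", "black", "oolong", "white", "chamomile", "rooibos"}


def cooccurrence_counts(sentences):
    """Count how often each pair of tea types appears in the same sentence."""
    counts = Counter()
    for sentence in sentences:
        mentioned = sorted(TEA_TYPES & set(re.findall(r"[a-z]+", sentence.lower())))
        for a, b in combinations(mentioned, 2):
            counts[(a, b)] += 1
            counts[(b, a)] += 1
    return counts


def most_associated(counts, tea, n=2):
    # The "answer" is whatever co-occurred most often, regardless of who wrote it.
    partners = Counter({b: c for (a, b), c in counts.items() if a == tea})
    return [t for t, _ in partners.most_common(n)]


# Hypothetical training sentences.
corpus = [
    "Green and black tea are both made from the same plant.",
    "Black and green tea contain caffeine.",
    "Oolong sits somewhere between green and black.",
    "Chamomile and rooibos are herbal infusions.",
]
counts = cooccurrence_counts(corpus)
print(most_associated(counts, "green"))  # ['black', 'oolong']
```

If the training sentences were full of a popular misconception, the counts would faithfully reproduce it; nothing in this process checks the quality of the source.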

As the complexity of AI tools increases, so does the power needed to run them. For example, while Google’s search engine was originally a fancy algorithm, many Google searches now automatically generate an AI Overview, which uses generative AI to create a summary of search results. This addition of a more complex AI tool comes at a cost. The International Energy Agency’s Electricity 2024 report states that “Search tools like Google could see a tenfold increase in their electricity demand in the case of fully implementing AI in it. When comparing the average electricity demand of a typical Google search (0.3 Wh of electricity) to OpenAI’s ChatGPT (2.9 Wh per request), and considering 9 billion searches daily, this would require 10 TWh of additional electricity a year”. In other words, it takes roughly 10 times more energy to use ChatGPT to answer a question than to Google it. If you’ve heard more about the growing demand for energy and data centers, the increased use of AI is one of the driving factors (6).
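The quoted figures can be checked with a few lines of arithmetic. The quote doesn’t spell out exactly how the IEA reached 10 TWh, so this is only a plausibility check using the three numbers it gives: running 9 billion daily searches at ChatGPT’s 2.9 Wh instead of 0.3 Wh adds roughly 8.5 TWh a year, and the total at 2.9 Wh is roughly 9.5 TWh, both in the neighborhood of the quoted figure.

```python
SEARCH_WH = 0.3         # Wh per typical Google search (figure quoted from the IEA)
CHATGPT_WH = 2.9        # Wh per ChatGPT request (figure quoted from the IEA)
SEARCHES_PER_DAY = 9e9  # daily searches (figure quoted from the IEA)
WH_PER_TWH = 1e12       # 1 terawatt-hour = 10^12 watt-hours

ratio = CHATGPT_WH / SEARCH_WH
extra_twh = (CHATGPT_WH - SEARCH_WH) * SEARCHES_PER_DAY * 365 / WH_PER_TWH
total_twh = CHATGPT_WH * SEARCHES_PER_DAY * 365 / WH_PER_TWH

print(f"ChatGPT request vs. search: {ratio:.1f}x")         # 9.7x
print(f"Additional electricity: {extra_twh:.1f} TWh/year")  # 8.5 TWh/year
print(f"Total at ChatGPT rates: {total_twh:.1f} TWh/year")  # 9.5 TWh/year
```

The 2.9 / 0.3 ratio of about 9.7 is where the “roughly 10 times more energy” figure comes from.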

Taken as a whole, these chatbot tools can analyze an astounding amount of data and generate real-time, conversation-like responses. These conversation-like responses can feel not just human-like, but like you’re talking to an actual expert. However, recent research suggests that while chatbots may be very good at making us feel like they are experts, they are still prone to egregious and bizarre errors (7) (the website AI Weirdness documents “The strange side of machine learning”).

In Part 2, I’ll go over what makes an expert (both human and artificial), how expertise is developed, and how AI tools can help or hurt learning and expertise development.

References

1. Gardner, H. (1985). The mind's new science: A history of the cognitive revolution. Basic Books.

2. Miller, G. A. (2003). The cognitive revolution: A historical perspective. Trends in Cognitive Sciences, 7(3), 141–144. https://doi.org/10.1016/S1364-6613(03)00029-9

3. National Institute of Biomedical Imaging and Bioengineering. (n.d.). Artificial intelligence (AI). https://www.nibib.nih.gov/science-education/science-topics/artificial-intelligence-ai

4. Brown, S. (2021, April 21). Machine learning, explained. MIT Sloan School of Management. https://mitsloan.mit.edu/ideas-made-to-matter/machine-learning-explained

5. Metz, C. (2016, February 4). AI is transforming Google search. The rest of the web is next. WIRED. https://www.wired.com/2016/02/ai-is-changing-the-technology-behind-google-searches/

6. International Energy Agency. (2024). Electricity 2024: Analysis and forecast to 2026. https://iea.blob.core.windows.net/assets/6b2fd954-2017-408e-bf08-952fdd62118a/Electricity2024-Analysisandforecastto2026.pdf

7. Imundo, M. N., Watanabe, M., Potter, A. H., Gong, J., Arner, T., & McNamara, D. S. (2024). Expert thinking with generative chatbots. Journal of Applied Research in Memory and Cognition, 13(4), 465–484. https://doi.org/10.1037/mac0000199

8. Adamopoulou, E., & Moussiades, L. (2020). An overview of chatbot technology. In I. Maglogiannis, L. Iliadis, & E. Pimenidis (Eds.), Artificial intelligence applications and innovations, AIAI 2020: IFIP advances in information and communication technology (Vol. 584, pp. 373–383). Springer. https://doi.org/10.1007/978-3-030-49186-4_31