GUEST POST: Cumulative Compensatory Assessment in Engineering Education

By Peter Verkoeijen & Anton den Boer

Dr. Peter Verkoeijen is an associate professor in educational psychology at the Department of Education, Psychology, and Child Studies at Erasmus University Rotterdam, The Netherlands. He is also professor of applied sciences (“lector” in Dutch) in the Brain and Learning research group (“lectoraat”) at Avans University of Applied Sciences in Breda. Peter has many years of experience teaching and coordinating courses at the university bachelor's and master's level. His research focuses mainly on the theoretical mechanisms underlying spacing and retrieval practice effects and on the practical applications of these effects in the classroom. Peter tweets at @Jverkoeijen.

Dr. Anton den Boer is a lecturer at the Department of Mechanical Engineering at Avans University of Applied Sciences. He is also chairman of the first-year education committee. Anton studied mechanical engineering and mechanical medical technology at the Eindhoven University of Technology. In his Ph.D. thesis he described the influence of Marangoni convection on heat transfer. Anton is also a researcher in the Brain and Learning research group, which fits his interest in evidence-based education. In his research, he wants to find out which educational interventions help students attain higher levels of achievement and motivation.

More information about Peter and Anton can be found at https://www.egsh.eur.nl/people/peter-verkoeijen/ and http://www.avans.nl/onderzoek/expertisecentra/stand-alone-lectoraten/lectoraten/brein-en-leren/deelnemers/brein-en-leren/anton-den-boer (Dutch only)

Procrastination – that is, delaying the start or completion of intended work – is extremely common among higher education students (1). The situation in Anton’s Materials Science 1 course (part of the first-year Engineering curriculum at Avans University of Applied Sciences) is a case in point. Students in this course tended to postpone their learning activities until just before the end-of-course assessment. To Anton, this academic procrastination was problematic because it reduced the effectiveness of his instruction. Specifically, the lessons in the seven-week Materials Science 1 course feature tasks and exercises designed to help students build meaningful knowledge (e.g., (2), (3)), but Anton noted that these were considerably less worthwhile when students came to class unprepared. To counter this negative effect of academic procrastination, we designed an intervention intended to promote retrieval practice and spaced study, and we assessed its impact on academic performance in a randomized field experiment. Here, we report on the nature of the intervention and the outcomes of our experiment.

One approach to combating procrastination is to intersperse summative assessments throughout a course (e.g., (4)). Recently, Kerdijk and colleagues (5) proposed a new variant: cumulative compensatory assessment. Under this approach, several summative assessments are administered during a course. Most importantly, each assessment covers all course content offered up to that point (that is, the assessments are cumulative). Because of this accumulation, students are encouraged to test themselves repeatedly in a distributed manner, which promotes retrieval practice and spaced repetition, both of which are known to enhance learning (e.g., (6); (7); (8); (9); (10)). Furthermore, in cumulative compensatory assessment, the scores on the individual assessments are combined into a single score that counts toward the final course grade. As a result, students can compensate for a poor performance on one test with better performances on others, which we expected would help students keep their study efforts at a high level throughout the entire course.
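To make the cumulative structure concrete, the sketch below assumes a hypothetical schedule in which assessment k covers the content of lessons 1 through k (the schedule actually used in the course may differ) and counts how often each lesson's content is tested. Under this assumption, content from early lessons is retrieved repeatedly at spaced intervals, which is the mechanism the approach relies on. The function names are illustrative only.

```python
# Hypothetical schedule: assessment k (1-based) covers lessons 1..k.
# This mapping is an assumption for illustration, not the course's real schedule.

def cumulative_coverage(num_assessments: int) -> dict[int, list[int]]:
    """Map each assessment number to the lessons whose content it covers."""
    return {k: list(range(1, k + 1)) for k in range(1, num_assessments + 1)}


def retrieval_count(coverage: dict[int, list[int]]) -> dict[int, int]:
    """Count how many assessments test each lesson's content."""
    counts: dict[int, int] = {}
    for lessons in coverage.values():
        for lesson in lessons:
            counts[lesson] = counts.get(lesson, 0) + 1
    return counts


if __name__ == "__main__":
    coverage = cumulative_coverage(6)
    print(coverage[3])                # [1, 2, 3]
    print(retrieval_count(coverage))  # {1: 6, 2: 5, 3: 4, 4: 3, 5: 2, 6: 1}
```

With six cumulative assessments, content from the first lesson is retrieved six times, whereas a single end-of-course exam tests every lesson exactly once.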

What we did

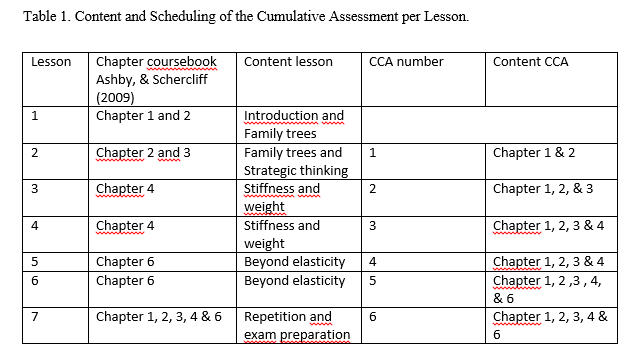

We worked together to design a cumulative compensatory assessment intervention and a research plan for the field experiment, which Anton and his colleague Alain implemented during the 2016-2017 Materials Science 1 course. The course ran for seven weeks, and each week a 2-hour lesson was scheduled in which the teacher introduced new course content and students worked on various assignments related to that content. The rest of the course load – approximately 42 hours – had to be spent on self-study. All 105 students, spread across four classes taught by two teachers, participated in the field experiment and were randomly assigned to the cumulative compensatory assessment condition or the control condition. The students in the cumulative compensatory assessment condition (CCA in Table 1) took six cumulative compensatory assessments during the course according to the schedule in Table 1.
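The post does not describe how the random assignment was carried out. As a minimal sketch, assuming individual students are the unit of randomization and no stratification by class, a reproducible random split of 105 students into two near-equal groups could look like this (the student IDs and the seed are hypothetical):

```python
import random


def assign_conditions(student_ids: list[str], seed: int = 2016) -> dict[str, str]:
    """Randomly split students into 'CCA' and 'control' groups of near-equal size.

    Assumption: students (not classes) are the unit of randomization.
    """
    rng = random.Random(seed)  # fixed seed so the assignment can be reproduced
    shuffled = student_ids[:]  # copy so the caller's list is not reordered
    rng.shuffle(shuffled)
    half = (len(shuffled) + 1) // 2  # with an odd count, CCA gets one extra student
    return {sid: ("CCA" if i < half else "control") for i, sid in enumerate(shuffled)}


if __name__ == "__main__":
    students = [f"s{n:03d}" for n in range(1, 106)]  # 105 hypothetical student IDs
    groups = assign_conditions(students)
    print(sum(1 for g in groups.values() if g == "CCA"))  # 53
```

Shuffling once and cutting the list in half guarantees balanced group sizes, unlike flipping a coin per student.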

Each cumulative compensatory assessment consisted of 10 questions (see Figures 1 and 2 for examples), which were aligned with the questions on the end-of-course exam in terms of the knowledge covered, the skills required, and difficulty.

The only difference between the assessments during the course and the end-of-course exam was the format of the test items: the end-of-course exam used free-response and short-answer questions, whereas the cumulative compensatory assessments used various formats (such as “fill in the blank”, “jumbled sentence”, “short answer” and “true/false”). Each cumulative compensatory assessment was administered on a computer, and immediately after the test students received their score on a 10-point scale. The teacher (either Anton or his colleague Alain) then explained the step-by-step solution to each question.

The mean score of the best 5 cumulative compensatory assessments counted for 30% of the final course grade; the remaining 70% was determined by the grade on the end-of-course exam. Students in the control condition were also allowed to take the cumulative compensatory assessments so that they could monitor their own learning, but for these students the results did not count toward the final course grade. All students took the end-of-course exam about one week after the seventh lesson. Finally, after 12 weeks, students took a delayed test on Materials Science 1 at the start of the follow-up course, Materials Science 2. This delayed test was comparable to the cumulative compensatory assessments administered during Materials Science 1 in content and response format.
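The grading rule above (the mean of the best 5 of the 6 assessments weighs 30%, the exam 70%) can be written as a small function. This is a sketch, not the course's actual grading software: the function names are hypothetical, the post does not say how missed assessments were handled (here fewer than five scores raises an error), and no rounding of the final grade is applied. For control students, whose assessment scores did not count, the final grade is taken to be the exam grade alone.

```python
from typing import Optional


def cca_component(scores: list[float], best_n: int = 5) -> float:
    """Mean of the best `best_n` assessment scores on the 10-point scale."""
    if len(scores) < best_n:
        # Assumption: handling of missed assessments is not described in the post.
        raise ValueError(f"need at least {best_n} scores, got {len(scores)}")
    best = sorted(scores, reverse=True)[:best_n]  # drop the lowest score(s)
    return sum(best) / best_n


def final_grade(exam: float, cca_scores: Optional[list[float]] = None,
                cca_weight: float = 0.30) -> float:
    """30% best-5 assessment mean + 70% exam in the CCA condition;
    exam grade alone in the control condition (cca_scores=None)."""
    if cca_scores is None:
        return exam
    return cca_weight * cca_component(cca_scores) + (1 - cca_weight) * exam


if __name__ == "__main__":
    scores = [6.0, 7.5, 8.0, 5.0, 9.0, 8.5]  # hypothetical scores on six assessments
    print(cca_component(scores))                # 7.8 (the 5.0 is dropped)
    print(round(final_grade(6.5, scores), 2))   # 6.89
    print(final_grade(6.5))                     # 6.5 (control condition)
```

Dropping the lowest of six scores is what makes the scheme compensatory: one bad week does not pull down the assessment component.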

What we found

On the qualitative side, Anton and Alain noted that students in the cumulative compensatory assessment condition were better prepared for their lessons and seemed more motivated and active during them. Furthermore, in the control group, the number of students attending the non-compulsory cumulative compensatory assessment lessons decreased substantially during the course. Regardless of condition, students who attended the cumulative compensatory assessment lessons were very positive about the feedback they received from taking the cumulative compensatory assessments.

On the quantitative side, students in the cumulative compensatory assessment condition did particularly well, with a mean score of 7.8 (10-point scale, SD = 0.7) on their best 5 cumulative compensatory assessments. On the end-of-course exam, students in the cumulative compensatory assessment condition performed slightly better than their peers in the control condition, but the difference was small and not statistically significant. By contrast, we found a fairly large and significant cumulative compensatory assessment advantage on the delayed test. Table 2 shows the relevant descriptive statistics (CCA refers to “cumulative compensatory assessment”). At the time of the delayed test, about 90 students were still enrolled in the program. However, not all of them took the delayed test, and non-attendance was highest in the cumulative compensatory assessment group. To check for selective drop-out, we compared the Materials Science 2 performance of students who took the delayed test with that of students who did not. Performance was comparable across these groups, so we can rule out the obvious confound that mainly high-performing students took the delayed test.
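The post does not say which statistical test was used for the drop-out check. One standard option for comparing two independent groups of unequal size and possibly unequal variance is Welch's t-test; the sketch below computes its t statistic with Python's standard library. The scores are hypothetical, not data from the study.

```python
from math import sqrt
from statistics import mean, variance


def welch_t(a: list[float], b: list[float]) -> float:
    """Welch's t statistic for the difference in means of two independent groups.

    Uses sample variances (n - 1 denominator) and does not assume equal variances.
    """
    se = sqrt(variance(a) / len(a) + variance(b) / len(b))
    return (mean(a) - mean(b)) / se


if __name__ == "__main__":
    # Hypothetical Materials Science 2 grades for takers vs. non-takers of the delayed test.
    took_delayed_test = [6.0, 7.0, 8.0]
    skipped_delayed_test = [5.5, 7.0, 8.5]
    print(f"t = {welch_t(took_delayed_test, skipped_delayed_test):.2f}")  # t = 0.00
```

A t statistic near zero indicates similar group means. For a p-value, `scipy.stats.ttest_ind(a, b, equal_var=False)` runs the same Welch test.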

What we learned

Students and teachers (Anton and Alain) were positive about the use of cumulative compensatory assessment. In addition, when we share our findings with colleagues, they are enthusiastic about the field-experiment approach, which can be considered a nice example of John Hattie’s visible learning (11), and about the results on the delayed test. These delayed-test results may indicate that it takes some time before the positive effect of cumulative compensatory assessment becomes apparent. However, colleagues sometimes also express concerns about the summative nature of cumulative compensatory assessment. They worry that it may lead students to learn, and teachers to teach, to the test, and that it may lower the self-esteem of students who struggle with the course content. Colleagues also fear that cumulative compensatory assessment comes with high bureaucratic costs, such as extra grade administration. In light of these concerns, we are currently conducting a new pre-registered experiment that compares formative cumulative compensatory assessment with summative cumulative compensatory assessment (the variant used in the present experiment) in terms of their effects on study time, perceived competence, and immediate and delayed performance. The results of this study may be interesting input for a future blog post :-)

References

(1) Steel, P. (2007). The nature of procrastination: a meta-analytic and theoretical review of quintessential self-regulatory failure. Psychological Bulletin, 133, 65-94.

(2) Fiorella, L., & Mayer, R. E. (2015). Learning as a generative activity: eight learning strategies that promote understanding. New York: Cambridge University Press.

(3) Fiorella, L., & Mayer, R. E. (2016). Eight ways to promote generative learning. Educational Psychology Review, 28(4), 717-741.

(4) Wesp, R. (1986). Reducing procrastination through required course involvement. Teaching of Psychology, 13, 128-130.

(5) Kerdijk, W., Cohen-Schotanus, J., Mulder, B. F., Muntinghe, F. L. H., & Tio, R. A. (2015). Cumulative versus end-of-course assessment: effects on self-study time and test performance. Medical Education, 49, 709-716.

(6) Carpenter, S. K. (2012). Testing enhances the transfer of learning. Current Directions in Psychological Science, 21, 279-283.

(7) Delaney, P. F., Verkoeijen, P. P. J. L., & Spirgel, A. (2010). Spacing and testing effects: A deeply critical, lengthy, and at times discursive review of the literature. Psychology of Learning and Motivation, 53, 63-147.

(8) Dunlosky, J., Rawson, K. A., Marsh, E. J., Nathan, M. J., & Willingham, D. T. (2013). Improving students’ learning with effective learning techniques: Promising directions from cognitive and educational psychology. Psychological Science in the Public Interest, 14, 4-58.

(9) Roediger, H. L., & Karpicke, J. D. (2006). The power of testing memory: Basic research and implications for educational practice. Perspectives on Psychological Science, 1, 181-210.

(10) Rowland, C. A. (2014). The effect of testing versus restudy on retention: a meta-analytic review of the testing effect. Psychological Bulletin, 140, 1432-1463.

(11) Hattie, J. (2012). Visible learning for teachers: Maximizing impact on learning. New York, NY: Routledge.