Is Intuition the Enemy of Instruction? (Part 2)

By: Yana Weinstein & Megan Smith

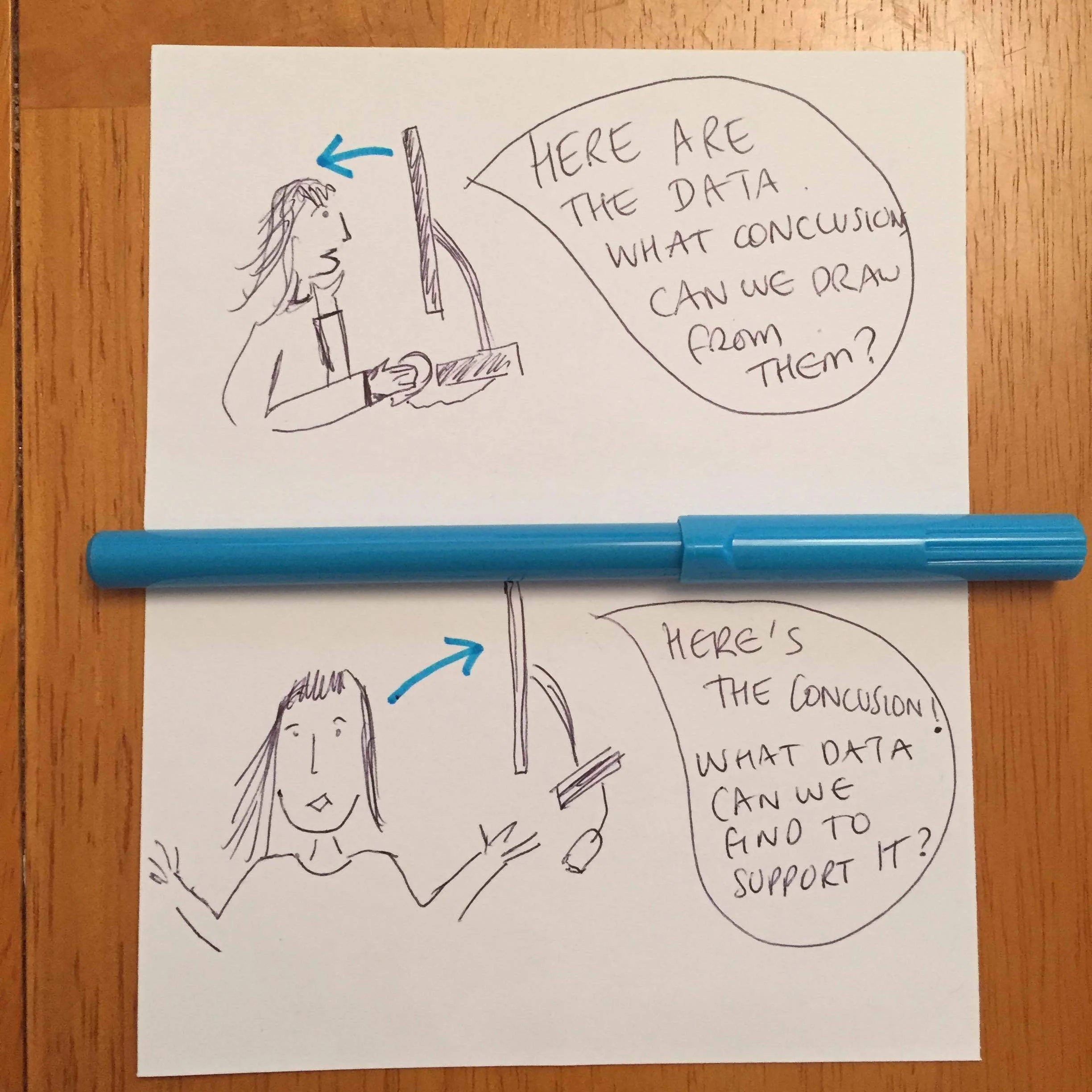

The other day, we discussed how our intuitions aren’t always accurate when it comes to our own learning, or the learning of our students. The second big problem arising from reliance on intuition, which we’ll discuss today, is confirmation bias. Confirmation bias is the tendency to seek out information that confirms our existing beliefs, or to interpret information in a way that confirms them (1).

How does this affect instruction and learning? Well, once we adopt a belief about what produces a lot of learning, we tend to look for examples that confirm that belief. So, imagine that you’re a firm believer in learning styles because you feel you’ve seen your students improve when you adapt your teaching to their learning styles. Then some party-poopers like us come along and tell you that learning styles are a myth. You decide to see for yourself whether we’re right. What would you Google: “evidence for learning styles” or “evidence against learning styles”?

Although, to our knowledge, no study has directly tested the question above (and now we kinda want to do it!), research from other domains suggests that people are more likely to look at confirmatory than contradictory evidence. For example – and very relevant to current affairs! – in one study conducted in the run-up to the 2008 US elections, participants browsed a specially designed online magazine. The researchers recorded which articles participants chose to read on topics such as abortion, health care, gun ownership, and the minimum wage, and how long they spent on each article. When given the freedom to explore the magazine, participants generally clicked on more, and spent longer reading, political messages that were consistent with their own beliefs (though we should note that there were some fascinating interactions with other variables, such as partisanship and level of news consumption, that are beyond the scope of this post) (2).

If we believe in something, we are also more likely to notice and remember examples that support our belief than examples that do not. So, if we believe in learning styles, we are more likely to notice the times when learning styles seem to work, and to forget the times when they don’t. For example, when Johnny doesn’t get your verbal description, but the light bulb finally goes on when you show him a diagram, you think, “Aha! There it is!” But was it really learning styles? Or was it just that he got a second presentation of the material, in a different format? What if the order had been reversed? And what about the times when a diagram isn’t as helpful?

The problem with faulty intuitions and biases is that they are notoriously difficult to correct (3). Simply informing people that these biases exist has had limited success (4). Somewhat more effective is a “consider-the-opposite” exercise, in which people are asked to list reasons why their opinion might be wrong before seeking out additional information on the topic (5). We’re curious to know – how often do teachers form an intuition about how students are learning in the classroom, and then sit down to write out the reasons why they might be wrong? It might be an interesting exercise to try.

And by the way, just because we research and teach about these biases doesn’t mean we’re immune to them, either! Take the story from our last blog post about the students who come to our offices – it may be that we’re conveniently forgetting all the other students who came to us complaining that they’d failed after diligently following instructions to practice retrieval. Does conceding this point reveal us to be rampant hypocrites? We hope not. Rather, we’d like to think there’s a way to acknowledge that we’re all human, going about our daily business making imperfect choices and judgments.

Science acknowledges human bias and constantly tries to combat it. The goal of science is to try to disprove ideas, not prove them. In fact, whenever we see the word “prove,” we immediately become skeptical. (Think “this shampoo is proven to make your hair softer!” – or worse, “proven success on your SATs with this app.” Doesn’t everyone immediately think they must just be trying to sell something? We do.) When scientists all over the world are working to disprove (or reject) theories, a lot of useful information is generated. Take learning styles, which have been disproven many, many times. That’s a concept we can safely say is not worth our time and money. But what about when scientists keep testing the null hypothesis (that a given strategy produces no more learning than a control), and the evidence keeps contradicting it, showing that the strategy does produce more learning? Now we can be far more confident! If we could all agree to acknowledge our human flaws, and to mindfully look for the evidence that research has generated rather than relying on intuition, maybe we would help more students actually learn, rather than get seduced by something that feels like learning but isn’t at all.

(1) Nickerson, R. S. (1998). Confirmation bias: A ubiquitous phenomenon in many guises. Review of General Psychology, 2, 175–220.

(2) Knobloch-Westerwick, S., & Kleinman, S. B. (2012). Preelection selective exposure: Confirmation bias versus informational utility. Communication Research, 39(2), 170–193.

(3) Pasquinelli, E. (2012). Neuromyths: Why do they exist and persist? Mind, Brain, and Education, 6(2), 89–96.

(4) Fischhoff, B. (1982). Debiasing. In D. Kahneman, P. Slovic, & A. Tversky (Eds.), Judgment Under Uncertainty: Heuristics and Biases (pp. 422–444). Cambridge: Cambridge University Press.

(5) Mussweiler, T., Strack, F., & Pfeiffer, T. (2000). Overcoming the inevitable anchoring effect: Considering the opposite compensates for selective accessibility. Personality and Social Psychology Bulletin, 26(9), 1142–1150.